If you had built a scale-out storage cluster, why would you not make it usable as a filer? One thing that we know for sure is that data storage requirements will keep growing. A scale-out storage platform that allows you to buy capacity progressively as your needs grow might be a great solution, and integrated data protection is a great bonus. The Cohesity name for file sharing from their cluster is Views. A View can be an NFS or SMB file share and can also be an S3-compatible object store. The default is for the View to be all three, although in this mode the S3 store is read-only, with objects ingested via the file share interfaces. If a View is set up as S3 only, then it is read/write accessible via S3.

Disclosure: This post is part of my work with Cohesity.

If you would rather see this as a video, take a look here:

Create a View

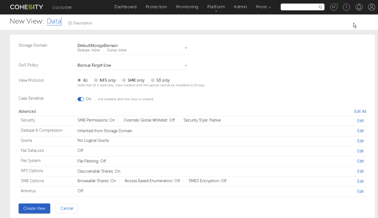

Views are managed under the “Platform” menu in the Cohesity console. A new View needs a name, which is also the name of the SMB share if SMB is enabled. Other sharing options are NFS and S3; I chose to use the “All” option, which means that the S3 access is read-only. I have seen object storage where the content was ingested through a file share and then consumed through the object interface, so read-only S3 object storage may not be an issue.
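To make the protocol rule concrete, here is a minimal Python sketch of how the S3 access level follows from the protocols chosen for a View. The names `Protocol` and `s3_access` are hypothetical and are not part of any Cohesity API. The source only describes the “All” and S3-only cases; treating any other mix that includes a file protocol like “All” is my assumption.

```python
from __future__ import annotations

from enum import Enum


class Protocol(Enum):
    """Sharing protocols a View can expose (hypothetical model)."""
    NFS = "nfs"
    SMB = "smb"
    S3 = "s3"


def s3_access(protocols: set[Protocol]) -> str:
    """Return the S3 access level for a View exposing the given protocols.

    - S3 only: read-write via S3.
    - S3 alongside a file protocol: read-only via S3, with ingestion
      through the file shares (assumed to match the "All" behaviour).
    - No S3: no object access at all.
    """
    if Protocol.S3 not in protocols:
        return "none"
    if protocols == {Protocol.S3}:
        return "read-write"
    return "read-only"


if __name__ == "__main__":
    all_protocols = {Protocol.NFS, Protocol.SMB, Protocol.S3}
    print("All:", s3_access(all_protocols))
    print("S3 only:", s3_access({Protocol.S3}))
```

The point of the sketch is that the access level is decided when the View is created, so it pays to settle on the ingestion path before you pick the protocol option.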

Another primary option is the QoS policy for storing data on this View. The default is “Backup Target Low,” which will restrict the performance of small block IO as it will mostly use the hard disk storage tier. I chose “TestAndDev High,” which will use more SSD and deliver better transactional performance for small files on the share.

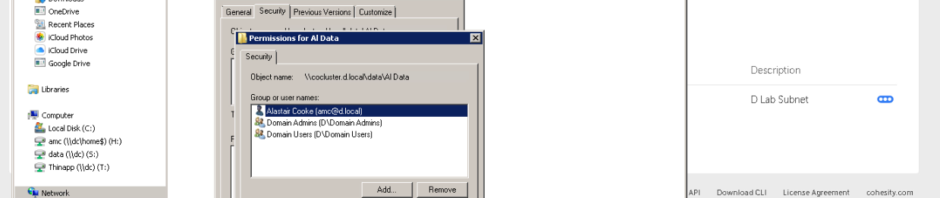

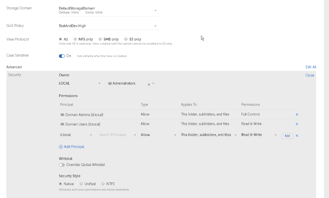

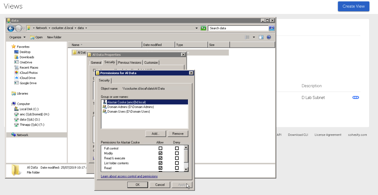

File system security is available. Since my Cohesity cluster is joined to my Active Directory, I could use AD groups to devolve control. I gave my Domain Admins group full control and Domain Users modify access. Removing the default of Everyone having Full Control is a good idea, because “Everyone” includes non-authenticated users.

The default capacity limit for a View is the available space in the Storage Domain. You can also set a lower maximum to limit the impact of a View on the capacity for other functions, such as data protection.
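The capacity rule amounts to a small piece of arithmetic, sketched below in Python. The function name and parameters are hypothetical, chosen for illustration only. The sketch also assumes that a quota larger than the free space in the Storage Domain is still bounded by that free space.

```python
from typing import Optional

TIB = 1024 ** 4  # bytes in one tebibyte


def effective_view_limit(domain_available_bytes: int,
                         view_quota_bytes: Optional[int] = None) -> int:
    """Return the capacity a View can actually consume.

    With no quota set, the View may grow to fill the Storage Domain.
    With a quota, the smaller of the two values applies (assumption:
    a quota can never grant more space than the domain has free).
    """
    if view_quota_bytes is None:
        return domain_available_bytes
    return min(view_quota_bytes, domain_available_bytes)


if __name__ == "__main__":
    # Example figures are made up: 20 TiB free, 5 TiB quota on the View.
    limit = effective_view_limit(20 * TIB, 5 * TIB)
    print(f"View can grow to {limit / TIB:.0f} TiB")
```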

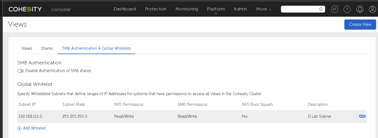

Despite having allowed my user account access to the file system, I was still not able to access the SMB share and was prompted for authentication. It turns out that there is another layer of security: Cohesity uses network whitelists to allow access to shares. I added my lab network subnet and was then able to access the share. Notice that on each subnet you have the option to turn off “root squash” for NFS shares. With root squash enabled, which is the usual NFS default, requests from the root user are mapped to an unprivileged anonymous user. VMware ESXi servers access NFS datastores as root, so to enable ESXi servers to access the NFS shares, you must set NFS Root Squash to No for the subnets containing the ESXi servers.
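The whitelist behaviour can be sketched with Python's standard `ipaddress` module. This is an illustration of the idea, not how Cohesity implements it. `WhitelistEntry`, `match_entry`, and `can_mount_as_root` are hypothetical names, and the subnets are made-up lab values.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class WhitelistEntry:
    """One whitelisted subnet (hypothetical model of a console entry)."""
    subnet: str                   # CIDR notation, e.g. "192.168.10.0/24"
    nfs_root_squash: bool = True  # assumed default: root is squashed


def match_entry(client_ip: str,
                whitelist: List[WhitelistEntry]) -> Optional[WhitelistEntry]:
    """Return the first whitelist entry containing client_ip, or None."""
    addr = ipaddress.ip_address(client_ip)
    for entry in whitelist:
        if addr in ipaddress.ip_network(entry.subnet):
            return entry
    return None


def can_mount_as_root(client_ip: str,
                      whitelist: List[WhitelistEntry]) -> bool:
    """True only if the client is whitelisted and root squash is off."""
    entry = match_entry(client_ip, whitelist)
    return entry is not None and not entry.nfs_root_squash


if __name__ == "__main__":
    whitelist = [
        WhitelistEntry("192.168.10.0/24"),                     # lab clients
        WhitelistEntry("10.0.20.0/24", nfs_root_squash=False)  # ESXi hosts
    ]
    for ip in ("192.168.10.15", "10.0.20.11", "172.16.0.5"):
        allowed = match_entry(ip, whitelist) is not None
        print(ip, "allowed:", allowed,
              "root mount:", can_mount_as_root(ip, whitelist))
```

Keeping the ESXi hosts on their own subnet means root squash only has to be disabled for that one entry, while ordinary clients keep the safer default.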

Once the subnet was whitelisted, I could access the SMB share through my cluster’s DNS name. I could also set security on individual folders inside the share, just the same as on a Windows file server. I created a subfolder and dumped a few gigabytes of files on the share.

Only a few minutes from start to finish and I have a file share available. The second View only took one minute to configure. Once created, administration activities are much like any other file share.

Protect the View

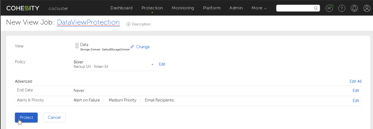

Once there was some data on the share/View, it was time to think about data protection for the View. Happily, setting up a Protection Job for the View is on the context menu of the View. The paths for the shares are also on that menu.

Like all Protection Jobs, you need to name this one and select a Protection Policy before you click Protect.

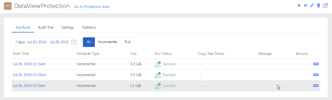

The next day, my protection runs showed successful local protection. I highly recommend having remote copies as well, to protect against someone deleting the Storage Domain, which contains both the View and the protection copies.

Local data protection was quick to set up and uses the existing data protection policies, which should reflect your data governance requirements. Local data protection isn’t enough; check the Warning below.

Restore to a View

You can restore files to the View; I chose to search for the View name.

Once you have the right View, you can browse the directory tree and select items to download or add to the “cart” to restore back in place.

If you want to restore back into the View, you will need to provide some file system credentials and choose whether to overwrite existing objects.

The restore is as simple as ever on Cohesity. Global search is a huge help in locating the object to be restored.

Warning – Segregation of Duties

One aspect to be aware of is that storing files on your backup platform may not match your data governance requirements. The issue is that the files and their backups share the same platform, and so a single administrative error may erase the original files and the data protection copies. Imagine the result if I inadvertently deleted the Storage Domain on my cluster: both the data and the backups would be gone. I suggest that replication should be part of the protection policy for any Views that contain valuable data. Replication to another Cohesity cluster, or to public cloud object storage, removes the single point of failure. If you need to use Views a lot, you may choose to build a separate Cohesity cluster just for the Views. The dedicated Views cluster should have a short retention period for backups and then replicate to your data protection Cohesity cluster with longer retention. I am also a fan of sending archives to AWS Glacier; the slow pace of data operations on Glacier allows more time to detect errors.
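One way to keep this warning from being forgotten is to audit protection policies for an off-cluster copy. The Python sketch below models a policy as a list of copy targets and flags any policy that only keeps local copies. The classes, the `kind` values, and the retention figures are all hypothetical; they illustrate the check and do not reflect Cohesity's policy schema.

```python
from dataclasses import dataclass, field
from typing import List

# Copy kinds that live outside the cluster holding the View (assumed labels).
OFF_CLUSTER_KINDS = ("replica", "archive")


@dataclass
class CopyTarget:
    """Where a protection copy is kept and for how long."""
    kind: str            # "local", "replica", or "archive"
    retention_days: int


@dataclass
class ProtectionPolicy:
    """A named policy with one or more copy targets (hypothetical model)."""
    name: str
    targets: List[CopyTarget] = field(default_factory=list)


def has_off_cluster_copy(policy: ProtectionPolicy) -> bool:
    """True if at least one copy survives deletion of the local Storage Domain."""
    return any(t.kind in OFF_CLUSTER_KINDS for t in policy.targets)


def policies_at_risk(policies: List[ProtectionPolicy]) -> List[str]:
    """Return the names of policies that only keep local copies."""
    return [p.name for p in policies if not has_off_cluster_copy(p)]


if __name__ == "__main__":
    policies = [
        ProtectionPolicy("views-local-only", [CopyTarget("local", 14)]),
        ProtectionPolicy("views-replicated", [
            CopyTarget("local", 7),       # short retention beside the View
            CopyTarget("replica", 90),    # longer retention on another cluster
            CopyTarget("archive", 365),   # e.g. a cold cloud archive tier
        ]),
    ]
    print("Policies without an off-cluster copy:", policies_at_risk(policies))
```

The second policy mirrors the dedicated Views cluster design: short local retention, with the long-lived copies held somewhere a single administrative mistake cannot reach.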

© 2019, Alastair. All rights reserved.
